Resources to help identify existing evidence or collect new evidence to evaluate how well each Solution Option meets your Evaluation Criteria

To assess how well each Solution Option you are considering meets your stated Evaluation Criteria, you will need common measures to evaluate the options against each criterion. For example, if the criterion relates to improving performance on a standardized test, the evaluation measure might be “score on standardized test” or “gain in score on standardized test since last academic year.”

DecisionMaker® suggests ways to evaluate Solution Options against many Evaluation Criteria, but if you need additional ideas, the resources listed below may help you find existing data or collect your own.

Some examples of existing information you can gather to evaluate Solution Options include:

- Efficacy studies or process evaluations published in peer-reviewed journals or other publications

- Information from the program vendor

- Data collected on outcomes in places where the Solution Options have been implemented previously, either in your own context or in other contexts

You may also choose to collect your own information by:

- Piloting Solution Options and surveying teachers or students for feedback

- Collecting data on outcomes

- Holding focus groups with stakeholders to understand buy-in

If you don’t have much time before a decision must be made, you may need to rely on data from other contexts, such as other districts or universities, and use these data to make an informed estimate of the results you could expect in your own context. Your confidence that results from another location can be replicated in your own might depend on factors such as how similar the conditions and populations served are, or whether the Solution Option was used in multiple locations and performed similarly across all of them. The Relevance and Credibility section below provides more guidance about this.

If no relevant data are available or can be collected within your timeframe for making the decision, you could elicit professional judgments from experienced staff members about how well each Solution Option will perform against each criterion. For example, you could ask counselors to review college planning software intended for high schoolers and estimate the percentage increase in the number of high schoolers in your district who would apply to college if you implemented each of several alternative software options in your high schools.

In some cases, decision-makers may want to use available evidence to evaluate Solution Options against Evaluation Criteria. However, they may struggle to determine whether the available evidence is relevant to, or even appropriate for, their context.

Additionally, when assessing evidence of effectiveness in particular, prior studies may exist, but they are likely to vary in quality or credibility. Decision-makers may want to consider the results of a less rigorous study to assess whether a Solution Option is effective, while accounting for the possibility that the study overestimates or underestimates the size of the impact.

To account for these issues, DecisionMaker® provides two optional indices to evaluate existing evidence: a Relevance Index and a Credibility Index.

The Relevance Index helps decision-makers determine how well a study’s findings apply to their own setting by assessing the extent to which various characteristics of the study population and context are similar to the decision-maker’s own context. The Credibility Index helps decision-makers assess how seriously to take the findings of a study by scoring it on the quality of study design.

The RI and CI Worksheet is available for download in several formats.

The RI and CI template is also available as an Excel workbook that calculates the Relevance and Credibility Indices automatically. Technical guidance with a more detailed explanation of the calculations is also available.

We have created a four-part video tutorial to help practitioners use the Relevance and Credibility Indices. See the Relevance and Credibility Index Tutorials page in DecisionMaker® to learn more.

Once you have identified the measures you will use to assess each Solution Option against each Evaluation Criterion and have the data you need to do so in hand, you will need to assign values on each measure for each Solution Option and enter these values into DecisionMaker®.

Data must be in numerical form for DecisionMaker® to calculate a utility value, e.g., points on a 0-10 scale, percentage points, hours per week, days per year, or rubric scores. If you have qualitative data, such as rubric ratings of “Meets grammar standards to the highest expected level” or “Meets most grammar standards at an acceptable level,” you will need to assign a numerical score to each rubric rating.
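As an illustration of the rubric conversion described above, the Python sketch below maps rubric ratings to numbers and averages them across several pieces of student work. The two lower rating labels, all of the point values, the `RUBRIC_SCORES` dictionary, and the `score_ratings` function are hypothetical, and averaging is only one assumed way to summarize ratings for a Solution Option; it is not a DecisionMaker® feature.

```python
# Hypothetical mapping from qualitative rubric ratings to numerical scores.
# The first two labels come from the example above; the last two labels and
# every point value are illustrative assumptions.
RUBRIC_SCORES = {
    "Meets grammar standards to the highest expected level": 4,
    "Meets most grammar standards at an acceptable level": 3,
    "Meets some grammar standards": 2,        # hypothetical label
    "Does not meet grammar standards": 1,     # hypothetical label
}


def score_ratings(ratings: list[str]) -> float:
    """Convert rubric ratings to numbers and return their mean.

    Raises KeyError if a rating has no assigned score, so unmapped
    ratings are caught rather than silently ignored.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    scores = [RUBRIC_SCORES[rating] for rating in ratings]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    pilot_ratings = [
        "Meets grammar standards to the highest expected level",
        "Meets most grammar standards at an acceptable level",
        "Meets most grammar standards at an acceptable level",
        "Meets some grammar standards",
    ]
    print(score_ratings(pilot_ratings))  # 3.0
```

Whatever scale you choose, applying the same mapping to every Solution Option keeps the resulting numbers comparable.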

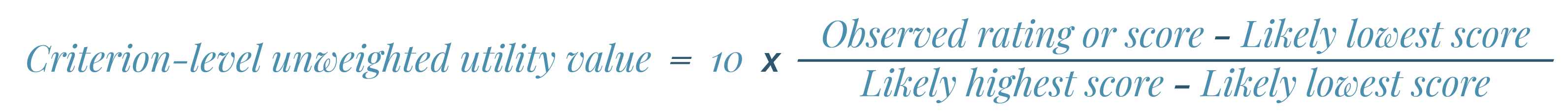

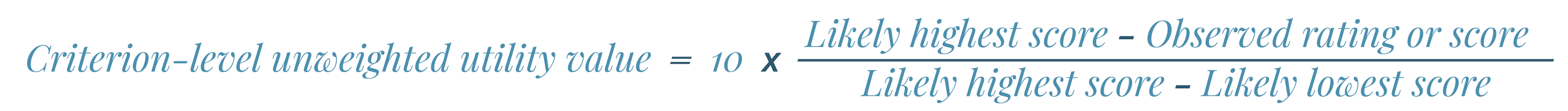

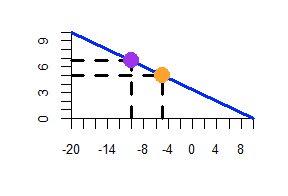

DecisionMaker® uses your expectations about the likely best-case and worst-case scenarios for each evaluation measure to set the upper and lower bounds of utility. The best-case value you provide is assigned a utility of 10 out of 10, on the assumption that you would be completely satisfied if a Solution Option performed at this level. Similarly, the worst-case value is assigned a utility of 0 out of 10, on the assumption that you would be very dissatisfied with a Solution Option that performed at the lowest likely level. For example, if the evaluation measure is a reading comprehension test scored from 0 to 40, you would enter the lowest and highest scores you think a student in the decision context might feasibly earn. If the population you are trying to serve is struggling readers, you might enter a likely lowest score of 10 and a likely highest score of 25. If it is advanced readers, the range might be 30 to 40. These ranges might be based on your past experience reviewing data from this test or on benchmarks provided by the test’s developer or vendor.

For some evaluation measures such as test scores, graduation rate, or time on task, you are likely to prefer higher values. For others, such as days of absence, dropout rate, or suspensions, you are likely to prefer lower values. DecisionMaker® asks you to indicate whether higher values are better for each Evaluation Criterion in order to establish whether utility increases for you and your stakeholders as the values go up or down.
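The bounds and direction described above can be sketched in Python as follows. This sketch assumes, purely for illustration, that utility changes linearly between your likely lowest and likely highest values and that values outside that range are capped at 0 or 10; DecisionMaker®’s own calculation may differ. The `utility` function and its parameter names are hypothetical.

```python
def utility(value: float, low: float, high: float,
            higher_is_better: bool = True) -> float:
    """Map a measure value onto a 0-10 utility scale.

    low and high are the likely lowest and highest values of the measure.
    Linear scaling between them and capping outside them are assumptions.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    # Position of the value within the range: 0.0 at low, 1.0 at high.
    fraction = (value - low) / (high - low)
    # Cap values outside the likely range (illustrative assumption).
    fraction = min(max(fraction, 0.0), 1.0)
    if not higher_is_better:
        # For measures like days of absence, the lowest value is best.
        fraction = 1.0 - fraction
    return 10.0 * fraction


if __name__ == "__main__":
    # Struggling readers: likely range 10 to 25 on the 0-40 test.
    print(round(utility(19, low=10, high=25), 2))  # 6.0
    # Hypothetical days-of-absence range of 2 to 12, where lower is better.
    print(round(utility(4, low=2, high=12, higher_is_better=False), 2))  # 8.0
```

Using the same likely range for every Solution Option means a score of 19 earns the same utility regardless of which option produced it.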

The free, online resources listed below can be used to help find existing evidence on educational programs, strategies and tools, or to help you produce new evidence to evaluate your Solution Options. Using Evidence to Strengthen Education Investments » is a useful introduction to thinking about evaluating educational programs and strategies.

Resources to help you conduct your own evaluations of Solution Options

- Best Practices in Survey Design from Qualtrics » Offers tips on designing questions for your own surveys.

- California Evidence-Based Clearinghouse » Provides descriptions of child welfare programs and information on the research evidence behind them, along with guidance on how to choose and implement programs.

- Digital Promise’s Ed Tech Pilot Framework » Provides an 8-step framework for district leaders to plan and conduct pilots on educational technology products, along with research-based tools and resources, and tips collected from 14 districts.

- LearnPlatform’s IMPACT™ » Rapid-cycle evaluation technology for evaluating edtech usage, pricing, and effects on student achievement. (Fee-based)

- Mathematica’s e2i Coach » Helps users design and analyze evaluations of educational programs or strategies, and interpret the results, using data on a group that uses the program or strategy and a comparison group.

- Practical Guide on Designing and Conducting Impact Studies in Education » A guide from the American Institutes for Research (AIR) on designing and conducting impact studies in education. It can also help research users assess the quality of research and the credibility of the evidence it produces.

- RCT-Yes » Facilitates the estimation and reporting of program effects using randomized controlled trials or other evaluation designs with comparison groups. Note that users need to download and install the software as well as R or Stata.

- Regional Education Laboratories » Regional Education Laboratories (RELs) can serve as a “thought partner” for evaluations of educational programs or initiatives. They also offer training, coaching, and technical support (TCTS) for research use “in the form of in-person or virtual consultation or training on research design, data collection or analysis, or approaches for selecting or adapting research-based interventions to new contexts.”

Resources to help you find existing evidence on educational programs, strategies and tools

- Best Evidence Encyclopedia (BEE) » Provides summaries of scientific reviews produced by many authors and organizations, with links to the full text of each review.

- Campbell Collaboration » Provides systematic reviews of research evidence.

- Cochrane Library » Provides systematic reviews of intervention effectiveness and of diagnostic tests in health care and public health.

- Digital Promise Research Map » Provides links to reviews and articles on educational research across 12 teaching and learning topics, and organizes them in Network View and Chord View to show connections among them.

- ERIC » Education Resources Information Center. An online library of education research and information, sponsored by the Institute of Education Sciences (IES) of the U.S. Department of Education.

- ERIC for Policymakers – A Gateway to Free Resources » Webinar recording of an April 16, 2019 presentation by ERIC staff.

- Evidence for ESSA » Free database of math and reading programs that align to the ESSA evidence standards.

- Google Scholar » You can search by keyword for scholarly publications on any topic, including peer-reviewed articles, evaluation reports, and white papers. These have not been vetted by Google, so you will need to carefully assess the source and credibility of any document you find here. Note that peer-reviewed journals often prefer that authors avoid naming specific programs and products. Also, some peer-reviewed articles may be behind a paywall; you can sometimes find a free pre-print version of the same paper online by pasting its title into your search engine.

- Regional Education Laboratories » School districts or State Education Agencies can contact their REL for help with gathering evidence on educational strategies and programs. Upon request, RELs will perform reference searches, informal reviews of the existing research on interventions, and reviews of specific studies against What Works Clearinghouse standards.

- What Works Clearinghouse » Provides reviews of existing research on different programs, products, practices, and policies in education, and summaries of findings.